Photos by Alex Matthews/Qualcomm Institute and Erik Jepsen/UC San Diego Publications

Tackling Changes and Challenges With Robotics

More than 200 engineers and scientists take part in Contextual Robotics Institute Forum on Campus

An increasing number of self-driving cars and delivery drones. An aging, and sometimes ailing, population. More complex and automated factories. These are just some of the changes coming to the United States in the decades ahead. On Friday, more than 200 engineers and social scientists from industry and the university came together on campus to discuss how robotics could help tackle the challenges these changes will no doubt bring.

They were taking part in the University of California San Diego Contextual Robotics Institute’s third annual forum. The one-day event featured talks by leading experts from around the world on developing robotics for the benefit of society. This year’s theme was “Shared Autonomy: New Directions in Human-Machine Interaction.”

The forum has grown over the years and reflects the increased importance of robotics in the San Diego region, said Albert P. Pisano, dean of the Jacobs School of Engineering at UC San Diego. This year’s event featured talks by top researchers at Qualcomm, Facebook, Nissan, Toyota and Leidos, as well as UC San Diego. “We are building right here in real time before your eyes a world-class robotics cluster in San Diego and the greater CaliBaja region,” Pisano said Friday.

At the center of it all is UC San Diego’s Contextual Robotics Institute, a partnership between the Jacobs School of Engineering and the Division of Social Sciences, which now includes more than 60 faculty members. This is an unusual and dynamic alliance, pointed out Carol Padden, dean of the Division of Social Sciences at UC San Diego. “We study how humans behave in the presence of intelligent machines,” she said. “One of the key questions we ask is what makes machines compelling? Why should machines be social? How should people interact with intelligent machines?”

These questions will have to be answered as robots, from self-driving cars to helpful companions, turn up in our everyday lives. “We want to make sure we think about how new technologies are going to impact people’s everyday life,” said Henrik I. Christensen, director of the Contextual Robotics Institute and a professor of computer science at UC San Diego.

Henrik I. Christensen is the director of the Contextual Robotics Institute at UC San Diego and the lead editor of the U.S. Robotics Roadmap.

Christensen is the lead editor of the 2016 U.S. Robotics Roadmap, which aims to determine how researchers can make a difference and solve societal problems in the United States. He gave a preview of the roadmap, which is sponsored by the National Science Foundation, UC San Diego, Oregon State University and Georgia Institute of Technology, during his talk at the forum. The document provides an overview of robotics in a wide range of areas, from manufacturing to consumer services, healthcare, autonomous vehicles and defense. The roadmap’s authors make recommendations to ensure that the United States continues to lead the field of robotics in research innovation, technology and policy. He published the latest edition of the roadmap this week, ahead of the 2016 presidential election.

Christensen also discussed the impact of robotics on manufacturing and the need for a national shared robotics infrastructure.

“We want to make sure that research solves real-life problems and gets deployed,” Christensen said. “We need to make sure that we are making an impact on people’s lives.”

Self-driving cars and policy

One section of the roadmap examines the rapid growth of, and advances in, self-driving cars. All major automakers today have units working on self-driving cars, Christensen said. “I believe that kids born today will never learn to drive a car,” he said. And industry experts are even more bullish, he pointed out.

But autonomous vehicles still have several obstacles to overcome. “It is important to recognize that human drivers have a performance of 100 million miles driven between fatal accidents,” Christensen said. “It is far from trivial to design autonomous systems that have a similar performance.”

In addition, local, state and federal agencies need to formulate policies and regulations that ensure these cars can share the road safely with vehicles driven by people. The same is true for unmanned aerial vehicles (UAVs), better known as drones.

UAVs in swarms or otherwise

Researchers also need to get better at controlling swarms of UAVs and robots. Currently, it takes a small group of people to operate a single complex UAV. This ratio needs to be inverted so that one person can control a small group of UAVs and other autonomous robots.

“Human-robot interactions should resemble the relationship between an orchestra conductor and musicians,” Christensen said. “Individual players need to be smart enough to take cues from the conductor and play on their own.”

During Friday’s forum, Jorge Cortes, a professor of mechanical engineering at the Jacobs School, showed how complex algorithms his team is developing allow a single person equipped with a tablet to control a swarm of roving robots.

More than 200 people registered for the event.

Health care and home companion robots

Christensen also predicted that a major wave of companion robots is about to enter the market, as the population of developed countries ages. For example, 50 percent of the Japanese population is over 50 years old. “We need to help the elderly stay in their homes,” Christensen said. “And robots can help us get there.”

The development of robots in the field of health care and especially eldercare needs to be a moonshot for this generation of roboticists, Christensen said.

Christensen’s ideal home care robot would function as both a walker and a wheelchair. It would be equipped with arms, be able to fetch medications and even prepare a meal. To reach this goal, robots will need a better understanding of their surroundings and must become more reliable. It is also essential that robots be easy enough to control that everyone can use them. That means home care robots, for example, need user interfaces that are simple and intuitive.

Intuitive does not necessarily mean making robots human-like, said Ayse Saygin. An associate professor in the Department of Cognitive Science and director of the Cognitive Neuroscience and Neuropsychology Lab, Saygin said robots that look like humans often have the unintended effect of being creepy or scary. She discussed her research on the “uncanny valley,” showing that agreement between motion and appearance is what our brains seem to like best. People are more comfortable with “robot-y robots” than ones that look human but move like a machine.

“Our brains are highly social,” Saygin said. People respond not just to a robot’s appearance and motion but also to its timing, for example, and to whether it is behaving appropriately in context. Designers cannot simply assume what will work for humans. “Cognitive science and neuroscience need to understand how humans and robots interact,” she said. “We need empirically guided evidence to make engaging, acceptable robots.”

Teacher bots, robot drumming and more

After the talks, UC San Diego students and professors showed off their robotics technologies during a tech showcase.

MiP is the result of a collaboration between the UCSD Robotics lab and toy manufacturer WowWee.

Janelle Duenas, a graduate student representing professor Thomas Bewley's Coordinated Robotics Lab at the forum, showed off a self-balancing, educational robot kit with a spinning row of LEDs on its head, programmed to display a Pac-Man character. This Mobile Inverted Pendulum robot, or MIP, will soon be on the market. "The goal of these kits is to inspire and educate," Duenas said. "If a team builds and programs appropriately several of these kits, they'll be able to play games like Pac-Man with real 'bots running through a maze on the floor, giving future video games a compelling new physical component."

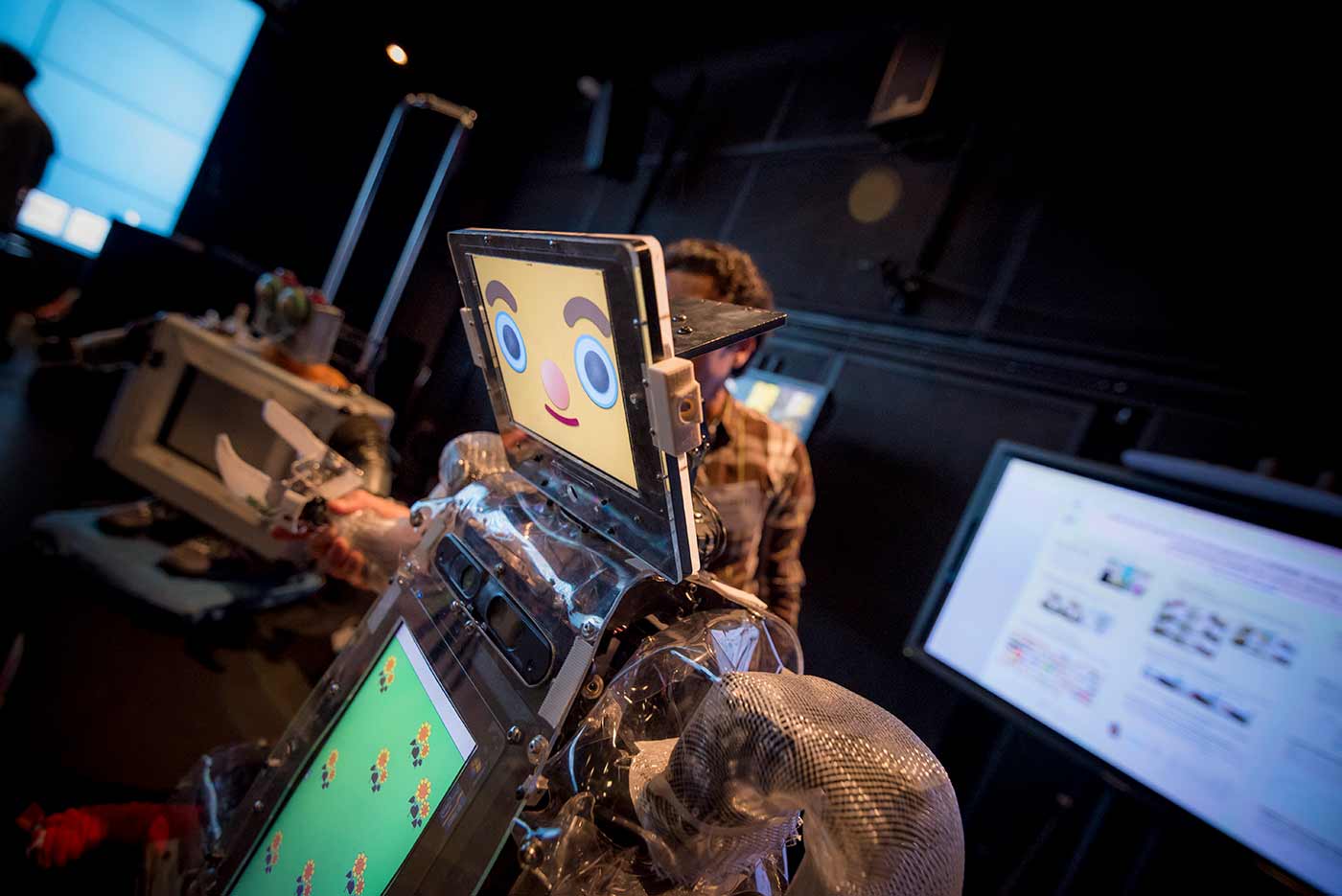

Cognitive scientist Andrea Chiba helped demonstrate RUBI, a robot that is part of a decade-long, ongoing effort at UC San Diego to better understand human-robot interaction. RUBI visits the university’s Early Childhood Education Center with cognitive science Ph.D. alumna Deborah Forster. The robot is helping to teach preschoolers letters, numbers and fun songs, and at the same time she is teaching researchers what it takes to engage with humans. Over the years, RUBI has evolved in response to the people she has interacted with, learning, for example, to recognize faces, read emotions and play a give-and-take game that children seem to especially enjoy. All of these behaviors are timed so that the interaction feels “natural” to our brains. Chiba and Forster also showcased a robotic rat called iRat, whose interactions with its biological counterparts are also giving insights into the social brain.

Nearby, a crowd lined up to put on a VR headset and look at micro- and nano-robots in action. These robots, developed in the lab of nanoengineering department chair Joseph Wang, in collaboration with labs run by professors Yi Chen and Liangfang Zhang, have many applications, including lithography (writing), circuit repair, swarming and drug delivery—even microcannons with nanobullets for gene silencing.

Meanwhile, the lab of Laurel Riek, associate professor of Computer Science and Engineering, showcased some of its work on creating autonomous robots that can work alongside people. Tariq Iqbal, a Ph.D. student in the lab, develops algorithms that enable robots to adapt. He demonstrated this using drums: a Kinect sensor tracked the hand motions of several people as they pounded on a drum, and a robot drummed back, adapting in real time to changes in tempo and rhythm. This research may one day help older adults and people with physical and cognitive impairments enjoy an improved quality of life with adaptable, easy-to-use robotic helpers.

"Robots need to understand the humans they're interacting with," said Iqbal. "We started by looking at how humans do it, and boiled the algorithm down to three parts: perception, anticipation and adaptation."
