Firing Up Fire Detection Efforts with Deep Learning Models

‘SmokeyNet’ incorporates multiple datasets to achieve a nearly 14 percent improvement in the time to detect initial smoke

Researchers at the San Diego Supercomputer Center (SDSC) at UC San Diego have developed new deep learning models to improve early wildfire detection. These efforts are led by SDSC’s WIFIRE Lab, an all-hazards knowledge cyberinfrastructure that serves as a management layer spanning data collection to modeling. In recent experiments, the researchers integrated detailed, real-time camera footage from the ground, satellite-based fire detections and weather data into a multimodal approach to the early detection of wildfires.

Led by SDSC’s Lead for Data Analytics Mai H. Nguyen and UC San Diego Computer Science & Engineering Professor Garrison W. Cottrell, the team published its work in a paper titled “Multimodal Wildland Fire Smoke Detection” in the MDPI journal Remote Sensing.

The paper discusses the team’s use of deep learning models, which are artificial intelligence (AI) models that use multiple processing layers to learn representations of data at increasingly complex levels of abstraction. The models can then detect patterns in these representations and use them to make predictions. The models presented in this research are the SmokeyNet baseline model, the SmokeyNet Ensemble and the Multimodal SmokeyNet extension.

The baseline SmokeyNet is a spatiotemporal model that combines three deep learning architectures: a Convolutional Neural Network (CNN), a Long Short-Term Memory (LSTM) network and a Vision Transformer (ViT). The SmokeyNet Ensemble merges the baseline model’s image-based smoke predictions with weather data and satellite-based fire predictions by computing a weighted average of the predictions obtained from these three data sources. The Multimodal SmokeyNet, by contrast, extends the baseline model to integrate weather data directly with the camera images.
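To make the difference between the two fusion strategies concrete, here is a minimal Python sketch. `ensemble_score` averages three per-source probabilities (late fusion), roughly as the SmokeyNet Ensemble is described; `fuse_features` instead appends weather features to an image feature vector before classification (early fusion), which is one common way to integrate a second modality directly. The function names and the default weights are hypothetical, and this is not necessarily how Multimodal SmokeyNet combines its inputs.

```python
from typing import Sequence


def ensemble_score(image_prob: float, weather_prob: float, satellite_prob: float,
                   weights: Sequence[float] = (0.6, 0.2, 0.2)) -> float:
    """Late fusion: weighted average of per-source fire probabilities.

    The default weights are hypothetical placeholders, not values from the paper.
    """
    probs = (image_prob, weather_prob, satellite_prob)
    if len(weights) != len(probs):
        raise ValueError("need exactly one weight per data source")
    total = sum(weights)
    # Normalize by the weight total so the result stays a probability in [0, 1].
    return sum(w * p for w, p in zip(weights, probs)) / total


def fuse_features(image_embedding: Sequence[float], weather: Sequence[float]) -> list[float]:
    """Early fusion: join image features and weather features into one vector
    that a single downstream classifier would consume."""
    return list(image_embedding) + list(weather)
```

With the placeholder weights, `ensemble_score(0.9, 0.3, 0.1)` returns about 0.62. A late-fusion ensemble can only reweight sources that each produce their own prediction, whereas early fusion lets one model learn interactions between, say, wind conditions and image features.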

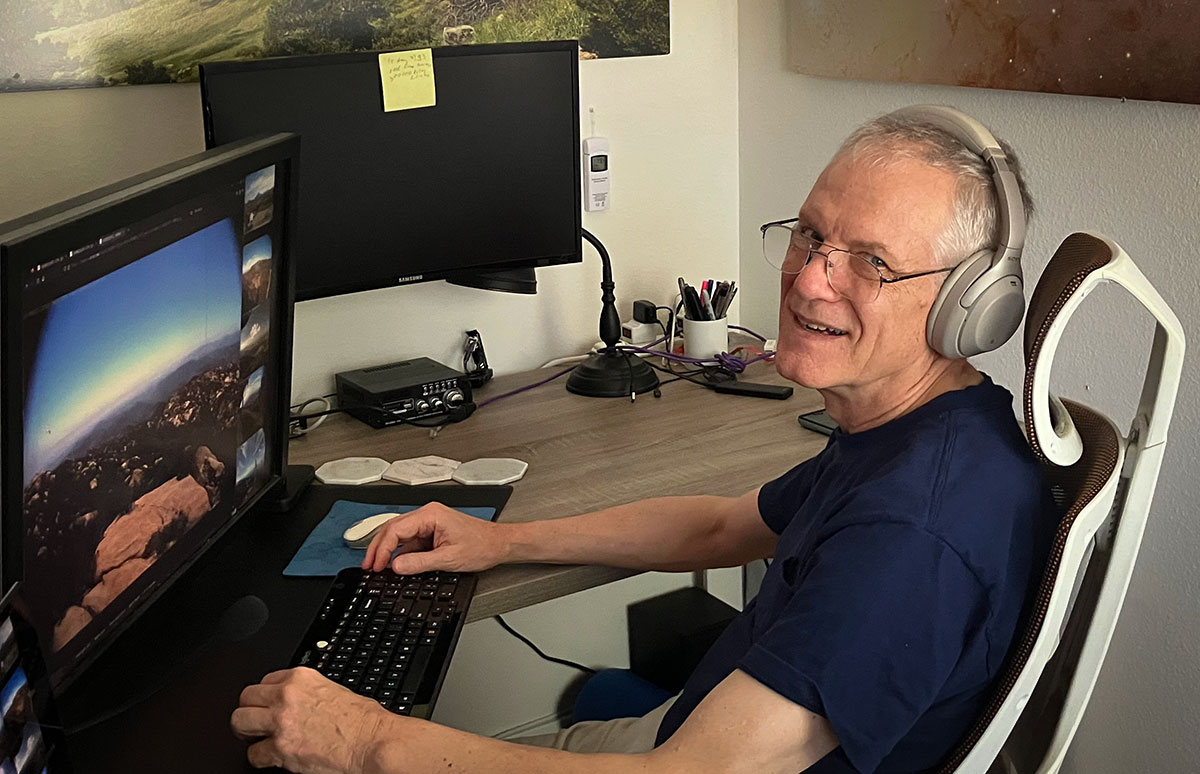

“We use the Fire Ignition images Library (FIgLib) dataset, which consists of about 20,000 images across geographically distinct terrains throughout southern California, as the source for the camera data,” said Jaspreet Bhamra, first author on the paper and former machine learning intern at SDSC. “These images were processed by SmokeyNet to detect wildfire smoke. We also use information regarding weather near the location of the images from three weather stations closely neighboring the cameras.”
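The quote describes pairing the camera images with nearby weather stations. A minimal sketch of one way to do that, assuming station coordinates are known: rank stations by great-circle (haversine) distance from the camera, keep the three closest and average a reading across them. The `Station` class, the `wind_speed` field and the averaging step are illustrative assumptions, not the team’s documented preprocessing.

```python
import math
from dataclasses import dataclass


@dataclass
class Station:
    """Hypothetical weather-station record: name, location and one reading."""
    name: str
    lat: float
    lon: float
    wind_speed: float  # illustrative reading, e.g. in m/s


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in kilometers between two points in decimal degrees."""
    r = 6371.0  # mean Earth radius in kilometers
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))


def nearest_stations(cam_lat: float, cam_lon: float,
                     stations: list[Station], k: int = 3) -> list[Station]:
    """Return the k stations closest to the camera, nearest first."""
    return sorted(stations, key=lambda s: haversine_km(cam_lat, cam_lon, s.lat, s.lon))[:k]


def mean_wind_near_camera(cam_lat: float, cam_lon: float,
                          stations: list[Station], k: int = 3) -> float:
    """Average the wind-speed readings of the k nearest stations."""
    chosen = nearest_stations(cam_lat, cam_lon, stations, k)
    if not chosen:
        raise ValueError("no stations available")
    return sum(s.wind_speed for s in chosen) / len(chosen)
```

Averaging is only one choice; a model could just as well receive each station’s readings as separate features.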

The Fire Ignition images Library (FIgLib) was developed by Hans-Werner Braun, a research scientist at SDSC and co-founder of the High Performance Wireless Research and Education Network (HPWREN), and includes thousands of images from remote areas of San Diego County. FIgLib served as the camera image data source for SmokeyNet. Credit: SDSC

For the SmokeyNet Ensemble, the team also used fire detection data derived from satellite images from the Geostationary Operational Environmental Satellite (GOES) system, a product designed to detect and characterize fires from biomass burning. Bhamra said that their results showed no notable advantage of the SmokeyNet Ensemble over the SmokeyNet baseline model, indicating that the weather data and the GOES satellite data both served as weak signals. In contrast, the Multimodal SmokeyNet improved on the SmokeyNet baseline model in accuracy, F1 score and time-to-detect.
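Accuracy and F1 are standard binary-classification metrics. Time-to-detect is sketched here under a hypothetical convention in which each frame carries its minutes since ignition (negative before ignition) and the metric is the first frame at or after ignition that the model flags as smoke. The function names and that convention are assumptions for illustration, not the paper’s evaluation code.

```python
from typing import Optional, Sequence


def accuracy_and_f1(y_true: Sequence[int], y_pred: Sequence[int]) -> tuple[float, float]:
    """Accuracy and F1 for binary labels (1 = smoke, 0 = no smoke)."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("need two non-empty label sequences of equal length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # smoke correctly flagged
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # clear frames correctly passed
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false alarms, e.g. low clouds
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # missed smoke
    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return accuracy, f1


def time_to_detect(minutes_since_ignition: Sequence[float],
                   y_pred: Sequence[int]) -> Optional[float]:
    """Minutes from ignition to the first positive prediction, or None if never detected.

    Hypothetical convention: frames before ignition have negative timestamps and
    are skipped, so a pre-ignition false alarm does not count as a detection.
    """
    for t, p in zip(minutes_since_ignition, y_pred):
        if t >= 0 and p == 1:
            return t
    return None
```

For labels `[0, 0, 1, 1, 1, 1]` and predictions `[0, 1, 0, 1, 1, 1]`, `accuracy_and_f1` gives an accuracy of 4/6 and an F1 of 0.75.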

“A 13.6 percent improvement in time to detect initial smoke was reported, and F1 mean improved by 1.10 while standard deviation decreased by 0.32 on average for smoke detection performance, allowing us to conclude that the Multimodal SmokeyNet model was the most efficient and stable of the three models tested,” Bhamra said.
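Bhamra’s figures compare models by relative change and by spread across runs. Assuming that the “percent improvement in time to detect” is a relative reduction from the baseline, and that the F1 mean and standard deviation are taken over repeated training runs, the arithmetic can be sketched as follows; both assumptions and the function names are hypothetical.

```python
import statistics
from typing import Sequence


def relative_improvement(baseline: float, new: float) -> float:
    """Percent reduction of a lower-is-better metric such as time-to-detect."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return 100.0 * (baseline - new) / baseline


def summarize_runs(scores: Sequence[float]) -> tuple[float, float]:
    """Mean and sample standard deviation of a metric (e.g. F1) across runs."""
    return statistics.mean(scores), statistics.stdev(scores)
```

With invented inputs, `relative_improvement(5.0, 4.0)` gives 20.0 (detection 20 percent faster), and `summarize_runs([80.0, 82.0, 84.0])` gives a mean of 82.0 and a sample standard deviation of 2.0. A smaller standard deviation across runs is what makes a model “more stable” in this sense.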

The team’s next steps include extending this work to additional fires and making use of unlabeled data to further improve performance.

“Specifically, we plan to analyze data from different geographical locations and camera types to extend the generality of our approach,” Nguyen said. “The issue of false positives, including low clouds, is also being examined in order to improve detection performance.”

Nguyen said that the team is also exploring ways to reduce the model’s compute and memory requirements to enable effective real-time smoke detection and assist in the battle against wildfires and related destruction.

This work was funded by the National Science Foundation (NSF) (grant no. 1541349) and San Diego Gas & Electric. Computational resources were provided by NSF (grant nos. 2100237, 2120019, 1826967 and 2112167).
