Staying in the Loop: How Superconductors are Helping Computers “Remember”

Superconducting loops may enable computers to retain and retrieve information more efficiently

Computers work in digits: 0s and 1s, to be exact. Their calculations are digital; their processes are digital; even their memories are digital. All of this requires extraordinary power resources. As we look to the next evolution of computing and the development of neuromorphic, or “brain-like,” computing, those power requirements become infeasible.

To advance neuromorphic computing, some researchers are looking at analog improvements. In other words, they are advancing not just software but hardware too. Research from the University of California San Diego and UC Riverside shows a promising new way to store and transmit information using disordered superconducting loops.

The team’s research, which appears in the Proceedings of the National Academy of Sciences, shows that superconducting loops can exhibit associative memory, which, in humans, allows the brain to remember the relationship between two unrelated items.

“I hope what we're designing, simulating and building will be able to do that kind of associative processing really fast,” stated UC San Diego Professor of Physics Robert C. Dynes, who is one of the paper’s co-authors.

Creating lasting memories

Picture it: you’re at a party and run into someone you haven’t seen in a while. You know their name but can’t quite recall it. Your brain starts to root around for the information: where did I meet this person? How were we introduced? If you’re lucky, your brain finds the pathway to retrieve what was missing. Sometimes, of course, you’re unlucky.

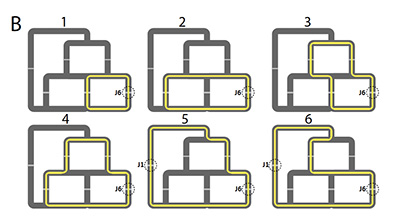

Distinct circulating current paths in a 4-loop network show the possible switching activity that allows flux to travel between loops. (Credit: Q-MEEN-C / UC San Diego)

Dynes believes that short-term memory moves into long-term memory with repetition. In the case of a name, the more you see the person and use the name, the more deeply it is written into memory. This is why we still remember a song from when we were ten years old but can't remember what we had for lunch yesterday.

“Our brains have this remarkable gift of associative memory, which we don't really understand,” stated Dynes, who is also president emeritus of the University of California and former UC San Diego chancellor. “It can work through the probability of answers because it's so highly interconnected. This computer brain we built and modeled is also highly interactive. If you input a signal, the whole computer brain knows you did it.”

Staying in the loop

How do disordered superconducting loops work? You need a superconducting material; in this case, the team used yttrium barium copper oxide (YBCO). Known as a high-temperature superconductor, YBCO becomes superconducting at around 90 kelvins (-297 F), which, in the world of physics, is not that cold. This made it relatively easy to modify. The YBCO thin films (about 10 microns wide) were manipulated with a combination of magnetic fields and currents to create a single flux quantum on the loop. When the current was removed, the flux quantum stayed in the loop. Think of this as a piece of information or memory.
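For readers who want to check the numbers, here is a minimal sketch of the two quantities mentioned in this section: the temperature conversion and the size of a single flux quantum. The superconducting flux quantum is the standard physical constant h/(2e); the function names are hypothetical and are not taken from the team's paper.

```python
# Illustrative sketch only; function names are hypothetical.
# Constants are the exact SI-defined values of h and e.
PLANCK_H = 6.62607015e-34            # Planck constant, joule-seconds
ELEMENTARY_CHARGE = 1.602176634e-19  # elementary charge, coulombs


def flux_quantum_wb() -> float:
    """Superconducting magnetic flux quantum, h / (2e), in webers."""
    return PLANCK_H / (2 * ELEMENTARY_CHARGE)


def kelvin_to_fahrenheit(kelvin: float) -> float:
    """Convert a temperature from kelvins to degrees Fahrenheit."""
    return (kelvin - 273.15) * 9 / 5 + 32


if __name__ == "__main__":
    print(f"Flux quantum: {flux_quantum_wb():.4e} Wb")
    print(f"90 K in Fahrenheit: {kelvin_to_fahrenheit(90):.2f} F")
```

The flux quantum works out to roughly 2.07 × 10^-15 webers, which gives a sense of how small the unit of stored "memory" in each loop is.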

This is one loop, but associative memory and processing require at least two pieces of information. For this, Dynes used disordered loops, meaning the loops are different sizes and follow different patterns — essentially random.

A Josephson junction, or “weak link,” as it is sometimes known, in each loop acted as a gate through which the flux quanta could pass. This is how information is transferred and the associations are built.

Although traditional computing architecture has continuous high-energy requirements, not just for processing but also for memory storage, these superconducting loops show significant power savings — on the scale of a million times less. This is because the loops only require power when performing logic tasks. Memories are stored in the physical superconducting material and can remain there permanently, as long as the loop remains superconducting.

The number of memory locations available increases exponentially with more loops: one loop has three locations, but three loops have 27. For this research, the team built four loops with 81 locations. Next, Dynes would like to expand the number of loops and the number of memory locations.
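The counts quoted here (3, 27 and 81) fit a simple power-of-three pattern. The sketch below assumes that each loop contributes three distinguishable states, an assumption inferred from those figures and not a description of the team's actual model. The names `STATES_PER_LOOP`, `memory_locations` and `enumerate_configurations` are hypothetical.

```python
from itertools import product

# Assumption inferred from the article's figures (3, 27, 81):
# each loop contributes three distinguishable states.
STATES_PER_LOOP = 3


def memory_locations(num_loops: int) -> int:
    """Number of distinct configurations for a network of num_loops loops."""
    if num_loops < 0:
        raise ValueError("num_loops must be non-negative")
    return STATES_PER_LOOP ** num_loops


def enumerate_configurations(num_loops: int) -> list[tuple[int, ...]]:
    """List every configuration as a tuple with one state index per loop."""
    return list(product(range(STATES_PER_LOOP), repeat=num_loops))


if __name__ == "__main__":
    for n in (1, 3, 4):
        print(f"{n} loop(s): {memory_locations(n)} memory locations")
```

Under this assumption, every added loop triples the number of locations, so a ten-loop network would already have 59,049.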

“We know these loops can store memories. We know the associative memory works. We just don’t know how stable it is with a higher number of loops,” he said.

This work is not only noteworthy to physicists and computer engineers; it may also be important to neuroscientists. Dynes talked to another University of California president emeritus, Richard Atkinson, a world-renowned cognitive scientist who helped create a seminal model of human memory called the Atkinson-Shiffrin model.

Atkinson, who is also former UC San Diego chancellor and professor emeritus in the School of Social Sciences, was excited about the possibilities he saw: “Bob and I have had some great discussions trying to determine if his physics-based neural network could be used to model the Atkinson-Shiffrin theory of memory. His system is quite different from other proposed physics-based neural networks, and is rich enough that it could be used to explain the workings of the brain’s memory system in terms of the underlying physical process. It’s a very exciting prospect.”

Full list of authors: Uday S. Goteti and Robert C. Dynes (both UC San Diego); Shane A. Cybart (UC Riverside).

This work was primarily supported as part of the Quantum Materials for Energy Efficient Neuromorphic Computing (Q-MEEN-C) (Department of Energy DE-SC0019273). Other support was provided by the Department of Energy National Nuclear Security Administration (DE-NA0004106) and the Air Force Office of Scientific Research (FA9550-20-1-0144).
