Qualcomm Institute Internship Trains Students for Careers in the Sonic Arts

The program offers UC San Diego students hands-on experience in programming for creative impact

There’s a unique space for bringing together music and technology at the UC San Diego Qualcomm Institute (QI). Called the Sonic Arts Research and Development Group, the laboratory designs new tools for immersive music and 3D soundscapes in extended reality.

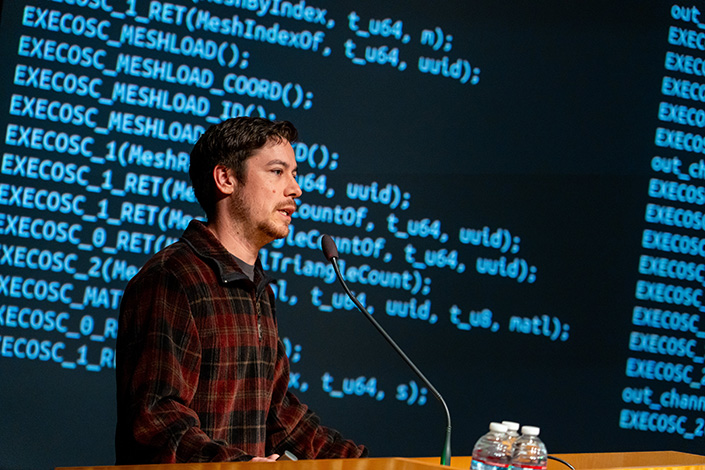

This year, Sonic Arts Director Shahrokh Yadegari, QI associate director and faculty member in the UC San Diego Department of Music, launched an internship, part of the QI California Workforce Development Program, that exposes UC San Diego students to cutting-edge techniques and prepares them for careers in music technology. The program’s first cohort recently showcased their projects in QI’s Atkinson Hall.

On the same playing field

The Sonic Arts lab has a history of mentoring students with diverse résumés. This year, the internship’s inaugural cohort included students specializing in fields such as mathematics, electrical engineering, cognitive science and computer science.

Interdisciplinary Computing in the Arts major Joel Martin at first found his lack of coding experience a daunting challenge. Yadegari and Grady Kestler, an alumnus of the Sonic Arts lab turned graduate student mentor, helped Martin find a project that involved making an existing music software program more accessible to people who, like him, have no programming background.

The program, called Space3D, creates more lifelike soundscapes in extended reality, a term that includes virtual reality (VR) and augmented reality. If an ambulance drives past a person in VR, for example, that person should hear the siren coming from the vehicle’s direction, growing louder as the vehicle approaches and quieter as it retreats.
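The ambulance example can be illustrated with a few lines of Python. This is only a minimal sketch and not how Space3D works internally: the function name `siren_cue`, the flat two-dimensional layout and the inverse-distance loudness rule are all assumptions chosen for illustration.

```python
import math

def siren_cue(listener, source, ref_distance=1.0):
    """Return (gain, azimuth_degrees) of a source relative to a listener.

    Hypothetical helper, not part of Space3D. The listener is assumed to
    face the +y direction; positive azimuth means the source is to the right.
    """
    dx = source[0] - listener[0]
    dy = source[1] - listener[1]
    # Clamp the distance so the gain never exceeds 1.0 close to the source.
    distance = max(math.hypot(dx, dy), ref_distance)
    gain = ref_distance / distance  # assumed inverse-distance law
    azimuth = math.degrees(math.atan2(dx, dy))
    return gain, azimuth

# An ambulance drives left to right along a road 5 meters in front of the listener.
for x in (-20.0, -10.0, 0.0, 10.0, 20.0):
    gain, azimuth = siren_cue((0.0, 0.0), (x, 5.0))
    print(f"x={x:6.1f} m  gain={gain:.3f}  azimuth={azimuth:7.2f} deg")
```

In this toy model the gain peaks when the ambulance is directly in front of the listener, and the azimuth sweeps from the left side to the right side as the vehicle passes.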

Martin’s task was to create an interface for coding commands into Space3D through Pure Data, an open-source visual programming language invented in the 1990s by QI composer-in-residence Miller Puckette, a retired faculty member in the UC San Diego Department of Music. Martin used Pure Data to digitally recreate physical setups, including speakers, represented as line-drawn buttons on a screen.

Through Pure Data, Martin could easily change the speakers’ volume and manipulate other variables to affect how audience members perceived incoming sound. With a more intuitive means of coding in Space3D, researchers now had the option of programming Space3D remotely and transmitting its output over the network into new spaces for audiences around campus.

“When audio tricks your brain, it’s like magic,” said Martin. “I would like to impart that magic to other people.”

Creating shared experiences

Xiaoxuan “Andrina” Zhang, Eduard Shkulipa, Helen Lin and Gabriel Diaz likewise worked over the summer to refine technology in ways that would create shared experiences for audiences. The students built on an algorithm that manipulated sound waves from different speakers arranged around a room.

Depending on the speakers’ settings, researchers could manipulate sound waves to either build on each other or cancel each other out. As a listener shifted positions around a room, they would therefore hear different conversations or music.
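The reinforcing and canceling behavior described here is ordinary wave interference, which a short Python sketch can make concrete. It is not the lab’s algorithm: the function `combined_amplitude`, the two-speaker layout and the single 343 Hz tone are assumptions for illustration, and the model ignores how sound weakens with distance.

```python
import math

SPEED_OF_SOUND = 343.0  # meters per second in air, approximate

def combined_amplitude(listener, speakers, frequency):
    """Amplitude of the summed tone at the listener's position.

    Hypothetical helper: every speaker plays the same unit-amplitude tone,
    and only the phase delay caused by path length is modeled.
    """
    wavelength = SPEED_OF_SOUND / frequency
    real = 0.0
    imag = 0.0
    for sx, sy in speakers:
        distance = math.hypot(listener[0] - sx, listener[1] - sy)
        phase = 2.0 * math.pi * distance / wavelength
        real += math.cos(phase)
        imag += math.sin(phase)
    return math.hypot(real, imag)

speakers = [(-1.0, 0.0), (1.0, 0.0)]  # two speakers 2 meters apart
tone = 343.0                          # wavelength of 1 meter

# Equal path lengths: the two waves arrive in step and build on each other.
print(combined_amplitude((0.0, 3.0), speakers, tone))

# Path lengths differ by half a wavelength: the waves cancel each other out.
print(combined_amplitude((0.25, 0.0), speakers, tone))
```

Moving the listener between those two positions changes the result from roughly double the single-speaker amplitude to nearly silent, which is the effect the speaker settings exploit.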

The students’ goal was to make this experience more fluid. When Zhang and Lin joined the Sonic Arts lab, the lab’s existing algorithm was fixed, so listeners would lose a particular strain of sound when they moved out of range. Zhang, Lin, Shkulipa and Diaz translated the algorithm from its original programming language into Pure Data.

Through its members’ combined efforts, the team created an interface that automated the process to the point that the students only needed to specify an audio file for a soundbeam to follow the listener around the room. At the time of their showcase, the students were working on automating a camera function so the speaker setup could tell when a listener had moved.

Ultimately, Zhang and Lin said, they could see the technology being used in art galleries with a heavy musical or sound component. People visiting a museum installation might even be able to listen to translations of the same exhibit in different languages via a single speaker set-up.

Getting to this point, both Zhang and Lin said, involved intensive work and communication with teammates from different majors.

“Everyone had different ways of thinking,” said Lin, a third-year undergraduate double-majoring in mathematics and computer science. “Each one of us worked on a different part of the process, and there was a lot of communication making sure the output of one step was what the next person expected.”

Several other teams studied how the shape of the human head and ears influences the way we perceive sound. Andrew Masek, working independently, and interns Ashwin Rohit, Poorva Bedmutha and Priyansh Bhatnagar all pursued projects on manipulating the way the ear processes sound to create more personalized audio.

Working with eight speakers, Masek put together new hardware to account for a person’s head and ear shape, as well as the distance and angle between the listener and speaker, to generate more spatialized sound.

Rohit, Bedmutha and Bhatnagar used smartphones to devise an easier and faster means of creating 3D models of a person’s head and torso. By studying these models using Space3D, the students could better analyze how an individual’s head and ear shape affect how they process sound. The research trends toward more personalized and immersive sound and music.

“For me, coming from a multidisciplinary background and having an interest in [many] fields…at every point, I found this project very exciting,” said Bedmutha, a Master’s student in the UC San Diego Jacobs School of Engineering’s Department of Electrical and Computer Engineering.

Interns Bryan Zhu and Lantz Darling also used Space3D to create real-time, two-way communications channels between people streaming 3D videos from real spaces through VR.

Programming for the arts

As part of the QI California Workforce Development Program, the Sonic Arts internship’s underlying goal is to give students hard skills for future careers in music technology and programming for the arts. But students like Martin have said that the experience also helped them develop evergreen skills, like the ability to break a large project into smaller and more manageable tasks.

“It made me realize I’m more capable than I thought I was, and I’ll take that going forward,” said Martin.

“This internship provides a unique interdisciplinary context with access to state-of-the-art technologies to develop the scientific, engineering and artistic skills of the students,” said Yadegari. “We hope that through this process the students are able to realize their potential in wider domains and are better prepared for their next professional or academic endeavors.”

The Sonic Arts internship is open to any UC San Diego undergraduate or graduate student interested in the intersection of sound, music and technology. Visit the Sonic Arts internship webpage to learn more.
