Making Crowdsourcing More Reliable


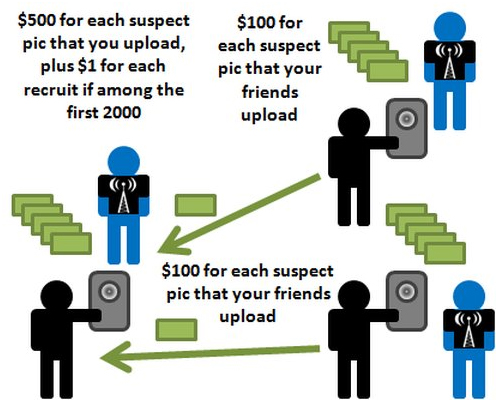

A diagram explaining the reward scheme Cebrian and colleagues used in the Tag Challenge.

From Wikipedia to relief efforts after natural disasters, crowdsourcing has become a powerful tool in today’s connected world. Now an international team of researchers, including a computer scientist at the University of California, San Diego, reports that it has found a way to make crowdsourcing more reliable. The team describes its findings in the Oct. 10 issue of the open-access journal PLOS ONE.

It’s all about checking for accuracy, the researchers say. If the information submitted is not accurate, neither the source nor the person who recruited them gets paid. “We showed how to combine incentives to recruit participants with incentives to verify information,” said Professor Iyad Rahwan of Masdar Institute in Abu Dhabi, a co-author of the paper. When a participant submits a report, the participant’s recruiter becomes responsible for verifying its accuracy. Rewarding both the recruiter and the reporting participant for accurate information, and penalizing them for inaccurate or misleading reports, ensures that the recruiter will check the information, the study showed.

“The methods we developed will help law enforcement and emergency responders,” said Manuel Cebrian, a computer scientist at UC San Diego and one of the study’s co-authors.

More reliable crowdsourcing will help apprehend criminals and find missing people, he said. It could also help find car bombs or kidnapping victims—quickly.

Manuel Cebrian is a research scientist at the Jacobs School of Engineering at UC San Diego.

The research team has successfully tested this approach in the field. Their group accomplished a seemingly impossible task by relying on crowdsourcing: tracking down “suspects” in a jewel heist on two continents in five different cities, within just 12 hours. The goal was to find five suspects. Researchers found three. That was far better than their nearest competitor, which located just one “suspect” at a much later time.

It was all part of the “Tag Challenge,” an event sponsored by the U.S. Department of State and the U.S. Embassy in Prague that took place March 31. Cebrian’s team promised $500 to those who took winning pictures of the suspects. If a winner had been recruited to the group, called “CrowdScanner,” by someone else, that recruiter would get $100. To help spread the word about the group, people who recruited others received $1 per recruit for the first 2,000 people to join.
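The arithmetic of that reward scheme can be sketched in a few lines of Python. This is only an illustration of the dollar amounts quoted above, not the team's actual software: the function name `compute_payouts`, the data layout and the sample participants are all hypothetical, and the sketch assumes that a winner who was not recruited by anyone simply triggers no recruiter payment.

```python
from collections import defaultdict

# Dollar amounts quoted in the article.
FINDER_REWARD = 500      # paid for a winning picture of a suspect
RECRUITER_REWARD = 100   # paid to whoever recruited the winner
SIGNUP_BONUS = 1         # paid to a recruiter per new member
SIGNUP_BONUS_CAP = 2000  # bonus applies to the first 2,000 people to join


def compute_payouts(join_order, recruited_by, winners):
    """Total payout per person (hypothetical helper, not the team's code).

    join_order   -- list of participants in the order they joined
    recruited_by -- dict mapping a participant to their recruiter
                    (participants who joined on their own are absent)
    winners      -- list of participants who took winning pictures
    """
    totals = defaultdict(int)

    # $1 to the recruiter of each of the first 2,000 people to join.
    for person in join_order[:SIGNUP_BONUS_CAP]:
        recruiter = recruited_by.get(person)
        if recruiter is not None:
            totals[recruiter] += SIGNUP_BONUS

    # $500 to each winner, plus $100 to the winner's recruiter, if any.
    for finder in winners:
        totals[finder] += FINDER_REWARD
        recruiter = recruited_by.get(finder)
        if recruiter is not None:
            totals[recruiter] += RECRUITER_REWARD

    return dict(totals)


# Made-up example: alice recruits bob, bob recruits carol, carol finds a suspect.
print(compute_payouts(
    join_order=["alice", "bob", "carol"],
    recruited_by={"bob": "alice", "carol": "bob"},
    winners=["carol"],
))
# prints {'alice': 1, 'bob': 101, 'carol': 500}
```

In this made-up chain, Bob earns more than Alice because he directly recruited the person who found a suspect, which is the behavior the scheme is meant to encourage.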

Cebrian also was part of an MIT-based team that used incentives to solve the first crowdsourcing challenge of this kind, sponsored by DARPA, back in 2009. That team used crowdsourcing and incentives to locate 10 large red balloons at undisclosed sites all around the United States. During that challenge, about two-thirds of the reports the team received were false. To avoid that problem, anyone who submits a report would be required to verify that it is accurate. Those who do not would see their compensation decreased by an amount proportional to the cost of verification: about $20, for example, for a verification that takes an hour. The deduction applies whether or not the report turns out to be correct.
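A rough sketch of that deduction, again in Python, may help make the idea concrete. Everything beyond the $20-per-hour example is an assumption made for illustration: the function name `net_compensation`, the `penalty_factor` parameter, the rule that an inaccurate report earns nothing, and the choice to let the result go negative rather than stopping at zero. The paper's actual mechanism may differ.

```python
def net_compensation(reward, accurate, verified, verification_hours,
                     hourly_cost=20.0, penalty_factor=1.0):
    """Compensation for one report (illustrative sketch, not the paper's model).

    reward             -- amount paid for an accurate report
    accurate           -- whether the report turned out to be correct
    verified           -- whether the report was verified
    verification_hours -- how long verification would have taken
    hourly_cost        -- assumed cost of an hour of verification ($20 in the article)
    penalty_factor     -- assumed proportionality constant for the deduction
    """
    # Assumption: an inaccurate report earns no reward at all.
    base = reward if accurate else 0.0

    # The deduction depends only on whether verification happened,
    # not on whether the report turned out to be correct.
    deduction = 0.0 if verified else penalty_factor * hourly_cost * verification_hours

    # Assumption: a negative result is treated as a net penalty.
    return base - deduction


# An accurate $500 report that skipped an hour-long verification.
print(net_compensation(500, accurate=True, verified=False, verification_hours=1))
# prints 480.0
```

Because the deduction is applied even when the report is correct, skipping verification is never free under these assumptions.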

“The success of an information gathering task relies on the ability to identify trustworthy information reports, while false reports are bound to appear either due to honest mistakes or sabotage attempts,” said Victor Naroditskiy of the Agents, Interaction and Complexity group at the University of Southampton, and lead author of the paper. “This information verification problem is a difficult task, which, just like the information-gathering task, requires the involvement of a large number of people.”

Of course, there are other ways to ensure that crowdsourcing provides accurate information. Sites like Wikipedia have existing mechanisms for quality assurance and information verification. But those mechanisms rely partly on reputation, as more experienced editors can check whether an article conforms to the Wikipedia objectivity criteria and has sufficient citations, among other things. In addition, Wikipedia has policies for resolving conflicts between editors in cases of disagreement.

However, in time-critical tasks, when volunteers get recruited on the fly, there is no a priori reputation measure. In this kind of scenario, incentives are needed to carry out verification. The PLOS ONE paper shows such incentives are effective. “The results on incentives to encourage verification provide theoretical justification for the incentives used to win the Red Balloon Challenge,” Cebrian said.
