Are Racks-on-Chip the Future of Data Centers?

By:

- Doug Ramsey

Increasing the scale and decreasing the cost and power of data centers requires greatly boosting the density of computing, storage and networking within those centers. That is the hard truth spelled out in the journal Science by faculty from the Jacobs School of Engineering at the University of California, San Diego.

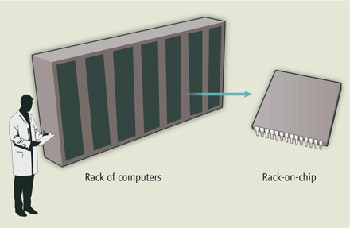

Writing in the Oct. 11 edition of Science, Electrical and Computer Engineering (ECE) Chair Shaya Fainman and Center for Networked Systems (CNS) Research Scientist George Porter – who is also a member of the Computer Science and Engineering systems and networking group – argue that one promising avenue to deliver increased density involves “racks on a chip.” These devices would contain many individual computer processing cores integrated with sufficient network capability to fully utilize those cores by supporting massive amounts of data transfer into and out of them.

In order to shrink data centers down to the size of a chip, a new data center network design is needed. “These integrated racks-on-chip will be networked, internally and externally, with both optical circuit switching (to support large flows of data) and electronic packet switching (to support high-priority data flows),” according to the article, “Directing Data Center Traffic.”
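The split the article describes, circuits for large flows and packets for high-priority ones, can be pictured with a minimal sketch. Everything here is an illustrative assumption rather than anything taken from the Science article: the `Flow` fields, the `choose_fabric` function and the 10 MB cutoff are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical cutoff: flows at or above this size are treated as "large".
# The Science article gives no such number; it is assumed for illustration.
LARGE_FLOW_BYTES = 10 * 1024 * 1024


@dataclass
class Flow:
    src: str
    dst: str
    size_bytes: int
    high_priority: bool = False


def choose_fabric(flow: Flow) -> str:
    """Pick 'packet' or 'circuit' for a flow in a hybrid network."""
    # High-priority traffic goes to the electronic packet switch.
    if flow.high_priority:
        return "packet"
    # Large bulk transfers go to the optical circuit switch.
    if flow.size_bytes >= LARGE_FLOW_BYTES:
        return "circuit"
    # Everything else stays on the packet switch by default (an assumption).
    return "packet"


bulk = Flow("core-1", "core-7", size_bytes=500 * 1024 * 1024)
urgent = Flow("core-2", "core-3", size_bytes=500 * 1024 * 1024, high_priority=True)
print(choose_fabric(bulk), choose_fabric(urgent))
```

In this sketch priority is checked before size, so an urgent flow stays on the packet switch however large it is. That ordering is a design choice made for the example, not one the researchers specify.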

Shrinking data centers: Evolution of a data center design in which a rack of multiple discrete servers, including the top-of-rack network switch, is integrated into a single chip. Image credit: P. Huey/Science

Optical networks can deliver high bandwidth at low cost and power, but they are not directly suitable for supporting the types of workloads present in data center networks. Indeed, the large-scale data centers supporting the growth of cloud computing must transmit data from server to server within the data center at bandwidths that are “orders of magnitude greater than their connections to end users.”

Industry is looking for ways to integrate optical networks densely within multicore processors, but Porter and Fainman – both of whom are affiliated with Calit2’s Qualcomm Institute at UC San Diego – argue that there are other challenges to overcome before that will be possible.

“Next-generation data center designs built with rack-on-chip designs will need to support both circuit and packet switching,” according to the Science article. Its co-authors argue that each processor in the rack-on-chip design must have a transceiver, which converts between the electrical signals in the processing core and the photons that travel through fiber-optic cables. That would require shrinking the transceivers enough to integrate them with the rack-on-chip.

To prevent overheating, the transceivers need to be “low-power and highly efficient,” so that only a small number of photons traveling a short distance are needed to represent a bit of information.

Nevertheless, for all the recent advances in nanophotonics and silicon photonics, “the efficient generation of light on a silicon chip is still in its infancy,” noted the researchers, adding that they “may not be able to overcome the fundamental issues prohibiting efficient generation of light in indirect band-gap semiconductors.”

On the hardware side, there are plenty of roadblocks to achieving highly scalable optical circuit architectures capable of supporting the many processor cores needed to sustain data center operations. One enabling solution could come from nonlinear metamaterials (given how difficult it is to transmit photons in natural materials). Nonlinear metamaterials would, in principle, be energy efficient. “Once this optical networking technology is integrated with electronic processors as a rack-on-chip design,” the article adds, “the number of such chips can then be scaled up to meet the needs of future data centers.”

According to Fainman and Porter, the technology could eventually deliver online applications to hundreds of millions of users, and enable big-data applications such as computational climate modeling.

How far in the future? Asked after the Science article’s publication, CNS’s Porter was realistic: “We’re excited about the potential of using cutting-edge photonic devices in data center networks, but the process of determining just what capabilities those devices should have, and then working with physicists and engineers to actually build and integrate them, is a decade-long undertaking.”

Read the full Science article here.
