SDSC, SDSU Share in $4.6 Million NSF Grant to Simulate Earthquake Faults

SDSC's New 'Gordon' Supercomputer to Assist in UC Riverside-led Project

By:

- Jan Zverina

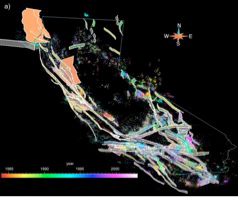

A perspective view of the Southern California Earthquake Center (SCEC) California Community Fault Model. Earthquake hypocenters are color coded by date. This model, with alternate representations of fine-scale details, will be implemented for the UCR project simulations. (Credit: Southern California Earthquake Center)

Researchers from the San Diego Supercomputer Center (SDSC) at the University of California, San Diego, and San Diego State University (SDSU) will be assisting researchers from six other universities and the U.S. Geological Survey (USGS) to develop detailed, large-scale computer simulations of earthquake faults under a new $4.6 million National Science Foundation (NSF) grant announced this week.

The computer simulations will use Gordon, SDSC’s innovative new supercomputer, which is scheduled to officially enter production in January. The result of a five-year, $20 million NSF award, Gordon is the first high-performance supercomputer to use large amounts of flash-based SSD (solid state drive) memory. Flash memory is more common in smaller devices such as mobile phones and laptop computers, but it is unusual in supercomputers, which generally use slower spinning-disk technology.

SDSC recently took delivery of Gordon’s flash-based I/O nodes and is providing access to early users for benchmarking and testing.

The five-year earthquake simulation project is being led by the University of California, Riverside (UCR), and also includes researchers from the University of Southern California (USC), Brown University, and Columbia University. Scientists will develop and apply the most capable earthquake simulators to investigate plate boundary fault systems, focusing first on the North American plate boundary and the San Andreas system of Northern and Southern California.

Such systems occur where the world’s tectonic plates meet, and control the occurrence and characteristics of the earthquakes they generate. The simulations can also be performed for other earthquake-prone areas where there is sufficient empirical knowledge of the fault system geology, geometry and tectonic loading.

“Observations of earthquakes go back to only about 100 years, resulting in a relatively short record,” said James Dieterich, a distinguished professor of geophysics in UCR’s Department of Earth Sciences, and principal investigator of the project. “If we get the physics right, our simulations of plate boundary fault systems – at a one-kilometer resolution for California – will span more than 10,000 years of plate motion and consist of up to a million discrete earthquake events, giving us abundant data to analyze.”

The simulations will provide the means to integrate a wide range of observations from seismology and earthquake geology into a common framework, according to Dieterich. “The simulations will help us better understand the interactions that give rise to observable effects,” he said. “They are computationally fast and efficient, and one of the project goals is to improve our short- and long-term earthquake forecasting capabilities.”

More accurate forecasting has practical advantages – earthquake insurance, for example, relies heavily on forecasts. More importantly, better forecasting can save lives and prevent injuries.

SDSC/UC San Diego researchers participating in this project include Yifeng Cui, director of the High Performance GeoComputing Laboratory at SDSC, and Dong Ju Choi, a senior computational scientist with the same laboratory. Other researchers include Steve Day and Kim Olsen from SDSU, David Oglesby and Keith Richards-Dinger from UCR, Terry Tullis from Brown, Bruce Shaw from Columbia, Thomas Jordan from USC, Ray Wells and Elizabeth Cochran from USGS, and Michael Barall from Invisible Software.

“The primary computational development for this effort is to enable an existing earthquake simulator developed at UCR to run efficiently on supercomputers with tens of thousands of cores,” said SDSC’s Cui. Cui recently participated in a project to create the most detailed simulation ever of a magnitude 8.0 earthquake in California, and code from that project will be used in the UCR project for detailed single-event rupture calculations.

“Simulations on this scale have been made possible by concurrent advances on two fronts: in our scientific understanding of the geometry, physical properties, and dynamic interactions of fault systems across a wide range of spatial and temporal scales; and in the scale of available computational resources and the methodologies to use them efficiently,” said SDSU’s Day, a researcher specializing in dynamic rupture simulation. Day also noted that the project will enrich research opportunities for students in the SDSU/UCSD Joint Doctoral Program in Geophysics, an important focus of which is to advance the scientific understanding of earthquake hazards.

The overall earthquake simulation project will require tens of millions of hours of supercomputer processing time, according to Cui, who said the researchers plan to conduct some of their simulations on Gordon, slated to go into production in January 2012.

Capable of performing in excess of 200 teraflops (TF) with a total of 64 terabytes (TB) of memory and 300 TB of high-performance solid state drives served via 64 I/O nodes, Gordon is designed for data-intensive applications spanning domains such as genomics, graph problems, and data mining, in addition to geophysics. Gordon will be capable of handling massive databases while providing speeds up to 100 times faster than hard disk drive systems for some queries.
